Data Discovery – end of Alpha phase

Our Data Discovery Alpha is coming to an end in April. You can still review the work we have done so far in our blog post, and we would love to hear your feedback.

As part of our end-of-phase work, we recently attended a Government Digital Service (GDS) workshop to review the progress of the Alpha. The ONS website is a live service, so it does not need to go through a service assessment. Even so, we felt it was important to have external experts review our work to make sure we are doing the right things.

We took a number of things away from the few hours we spent with the GDS team.

Data Discovery

- It is a good project with all the right people doing the right things on it.

- We need to be careful in how we describe the project to make it clear which existing ONS sites this work will replace.

- Working in the open is important, and few teams do it to the extent that we do (or as well).

High-level feedback

- Less is more. We need to make sure that simple things appear to be simple to our users.

- We need to keep iterating on all of the technology and UX choices on the project and test a wide range of ideas.

- Testing with real users and fake data is better than testing with fake users and real data.

The workshop looked at a few areas in more detail. We have compiled these into the brief notes below, along with some suggested actions or next steps to take into the Beta.

Design and content

We need to look at and explore as wide a range of design patterns and options as possible.

ACTION – Do more prototypes. Fake more things. Be prepared to fail even faster.

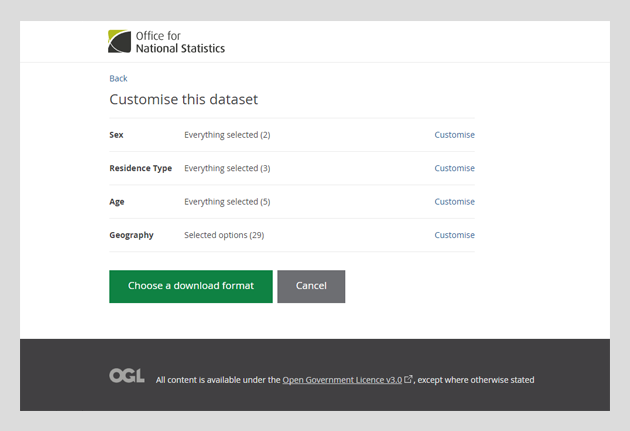

We need to make sure users feel supported in what they are doing at all times. All interactions should take place within a clear context. Examples included upfront badging of the number of steps needed to customise data, and clearer orientation about what each screen was doing and how it contributed to a user goal.

ACTION – Labels are important. We can use them to help users understand the mental models we are asking them to adopt. For example, is the customising experience a linear checkout, or a hub-and-spoke interaction?

The answer might not always be to download. If the answer is simple, do users just want that in the browser? Do they want to see a preview of the dataset in a browser, to see if it is the thing they were hoping for?

ACTION – Consider what an inline preview of a dataset could look like and what to do if a user customises to the extent that the answer is a single value.

User Research

Contextual research is really important. We need to remember that users will try to be helpful and give us an opinion even when they don't know the subject.

ACTION – Ensure that when testing with subject matter experts, we have data available in the area they most understand.

The difference between data and digital literacy is an important point to keep in mind.

ACTION – Be aware of the need to assess a user's digital skills separately from their knowledge of data.

Accessibility testing is also important. We need to be able to test with users who have a wide range of access needs. Again, these need to be users who would actually want to use the functionality, so that we gain the most value.

Team

We need to consider a range of agile structures and models so that we continue to adapt. All teams should evolve over the life of a project. On projects like this, which use an in-sourced service team, it is especially important to build in knowledge transfer from the start.

ACTION – Keep being agile.

Technology

API keys and user registration are going to be an ongoing conversation. How do we balance our need to report on and manage our users, which is hard to do if the API is totally open, against the impact that restricting the API may have on user registrations?

ACTION – Continue to work with our users and the business to best understand how to balance this need.

The architecture must meet our specific non-functional requirements (NFRs). Our site has some very specific NFRs that we need to build for from the start. This is particularly important when thinking about deployment processes and hosting models.

ACTION – Pick our technology solutions with care.

The API needs to provide access to the data. Feedback from the data.gov team reminded us to treat API users differently from other users. They will not always be data experts, so we have to make sure they can get to the information they need as easily as possible. This means we will need to consider operations such as paging and follow the patterns of our existing API.

ACTION – Ensure the Beta scope captures the need to access data via the API.
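The post does not describe how paging will work in the Beta API. To illustrate what "paging" means for an API user, here is a minimal sketch that assumes a simple offset/limit scheme. The function names `get_page` and `fetch_all` and the response fields `offset`, `limit`, `total_count` and `next_offset` are all hypothetical. They are not taken from the ONS API or its existing patterns.

```python
from typing import Any, Dict, List, Optional


def get_page(rows: List[Any], offset: int = 0, limit: int = 20) -> Dict[str, Any]:
    """Return one page of rows plus the metadata a client needs to request the next page.

    Hypothetical offset/limit scheme: the field names are illustrative only.
    """
    if offset < 0 or limit <= 0:
        raise ValueError("offset must be >= 0 and limit must be > 0")
    end = offset + limit
    # next_offset is None once the final page has been served
    next_offset: Optional[int] = end if end < len(rows) else None
    return {
        "items": rows[offset:end],
        "offset": offset,
        "limit": limit,
        "total_count": len(rows),
        "next_offset": next_offset,
    }


def fetch_all(rows: List[Any], limit: int = 20) -> List[Any]:
    """Client-side loop: keep requesting pages until next_offset is None."""
    collected: List[Any] = []
    offset: Optional[int] = 0
    while offset is not None:
        page = get_page(rows, offset, limit)
        collected.extend(page["items"])
        offset = page["next_offset"]
    return collected


if __name__ == "__main__":
    observations = list(range(45))  # stand-in for 45 rows of a dataset
    first = get_page(observations, offset=0, limit=20)
    print(first["next_offset"], first["total_count"])  # 20 45
    print(len(fetch_all(observations, limit=20)))  # 45
```

Returning `total_count` and `next_offset` with every page means a non-expert user does not have to work out for themselves when to stop requesting data.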

It was good to hear such positive feedback from the GDS team and we are ensuring we continue to take action on the items identified for improvements. If you are interested in finding out more, please do get in contact.

The feedback above, together with positive user research and testing with over 1,200 people during the Alpha and agreement from the relevant ONS boards, means we will now start the process of formally moving this project into a Beta phase.

5 comments on “Data Discovery – end of Alpha phase”


Put neighbourhood statistics back, it is brilliant. Why have you done this? It is madness!!!

For Users wanting to extract data via API, rather than it being on the user to Fetch or Grab the data, and within a secure pipeline gsi.gov.uk for example, would it be reasonable for direct access to agreed metrics, or failing that agreement to update and push data onto receivers as a more efficient and effective way of ensuring users have the most up to date information available?

So disappointed to see this go. I want to be able to click in LLSOA, make my own area and then see the relevant stats such as population, health, etc for the defined area. Now there appears to be nowhere that I can do this. Will the new service help? I have read about it but it doesn't really tell me what it does.

This really is disappointing. Accessing small area statistics and local data was so easy through the former platform.

Can you please inform me as to the platform I should use to access localised data sets, using a simple locations search to access the relevant data?

The neighbourhood statistics offered a data-rich insight for young students into their local community. A data driven social analysis that they were amazed by and inspired to investigate further. Where will young people find this information in future? Information about the socio economic profile of where they live, this awareness is otherwise lost and so difficult to replace in this interactive way.