Understanding our users in eQ Alpha – Outcomes – Part 2

The following blog post is written by Ben Cubbon.

The eQ has come to the end of its Alpha, and before we sprint ahead into the Beta wilderness we are reflecting on what we did in Alpha and what we have learnt. As the title cunningly suggests, I am going to share what we did to understand what our users are doing when they create questionnaires and when they complete them. I’ll then share my reflections on what we’ve learnt about our users, and the lessons we have taken from how we go about understanding them.

Discovery

Our Alpha was preceded by a short Discovery, in which we distilled the following types of eQ user:

- Authors: Users who create, build, edit and maintain questionnaires which are used to gather data.

- Reviewers: Users who provide quality assurance and testing of questionnaires.

- Respondents: Users who complete questionnaires. They can respond for themselves or as a proxy for someone else or another entity (e.g. a business).

- Interviewers: Users who interview respondents to complete a questionnaire.

- Admins: Internal Users who will administer and support the live platform, providing a customer service and front line support.

The Respondents can be further divided into different personas depending on the questionnaire being completed and the individual differences between respondents. However, it was not the aim of this team to understand all the service needs of Respondents, but to enable our Authors to create questionnaires that would meet the needs of Respondents.

The majority of our time in the Discovery was spent interviewing and observing the users who would use eQ to create the questionnaires that Respondents would complete. At ONS we have many different teams who have an input on a questionnaire, so it was vital that we spoke to these teams and understood what they did in order to understand what the eQ team would need to do.

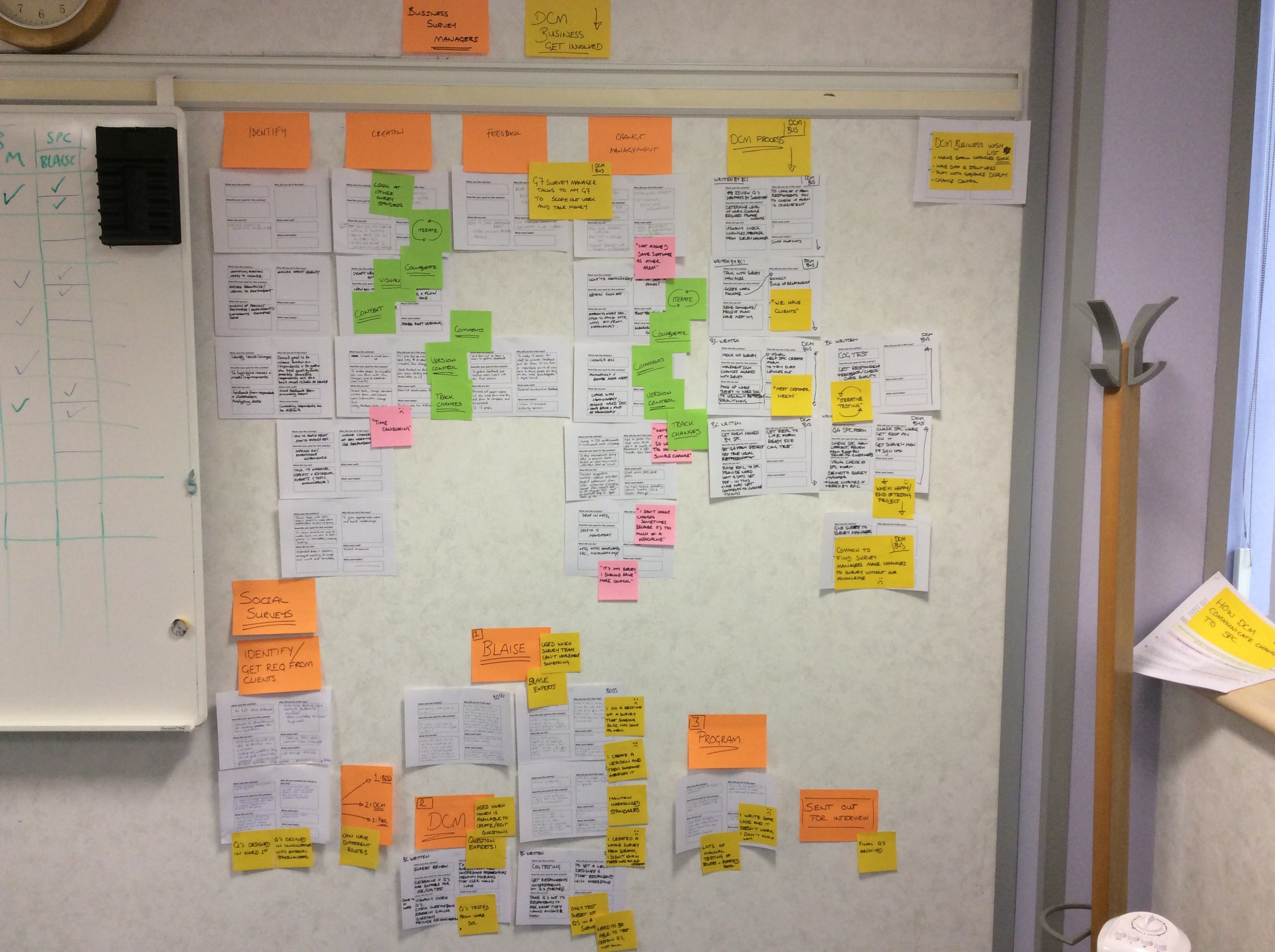

Experience map from our Discovery interviews with Authors

To understand our Authors, who are a combination of teams that currently come together to create or edit a questionnaire, we adopted an experience mapping approach, working with users to create a visual map of their experiences when authoring a questionnaire. Users filled out experience cards for each point in the journey. These were then collated into an overall experience map of how a questionnaire is authored, what is done, and why things are done.

This approach proved very successful as it helped distill finer details from the authoring experience. Artefacts written by the users themselves were used directly in the dissemination of the findings.

In addition, we spent time analysing past research conducted with Respondents to distill user needs that we could meet with features. The desk research was supplemented by getting out of the office to speak with members of the public, to see what potential respondents’ behaviours and worries would be when faced with an online questionnaire.

Alpha

In our Alpha we concentrated on understanding Authors, because we knew the least about them and they posed the most challenging needs. An Author is a single user, but it is a group of Authors that comes together to create a questionnaire and to continuously review and edit it. From our Discovery experience maps we saw the interaction between different Authors who bring different expertise, which is great and something that we would need to continue to support. Authors were also clearly frustrated by the time it took for changes to be realised, and a huge amount of effort was exerted to see changes from a Respondent’s point of view; this effort was to enable informed discussions about changes and to support testing of the changes with Respondents.

The Author needs distilled from Discovery provided our first testing objectives. We took our Alpha prototype into our in-house usability lab and tasked Authors with editing questionnaires, creating new questions and previewing questionnaires. Lab-based moderated usability testing gave the team an opportunity to observe users using the eQ, which in turn made findings from the research much easier to disseminate in the team. It also helped create a culture of asking ‘what would the user do?’. This isn’t without its difficulties though: observers can be too eager to fix an issue they’ve just observed, so it’s good practice to set some observation rules, such as only recording what was observed and not creating solutions straight away.

What we did observe as a team coming out of the lab sessions was that we were learning less from them and finding them less useful. This was down to our lack of upfront design, which we aim to address in Beta. We were testing functionality but not designs that had been researched, which was evident from the decrease in task completion, confusion over labels and a decrease in SUS (System Usability Scale) scores.
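For readers unfamiliar with SUS (the System Usability Scale), the sketch below shows the standard way a single participant's ten answers are turned into a score out of 100: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the total is multiplied by 2.5. The function names and the example answers are illustrative assumptions only; they are not the eQ team's tooling or data.

```python
from statistics import mean


def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` holds the ten answers in questionnaire order,
    each on the usual 1 (strongly disagree) to 5 (strongly agree) scale.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten responses")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each response must be a whole number from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher agreement is better.
            total += response - 1
        else:
            # Even items are negatively worded: lower agreement is better.
            total += 5 - response
    return total * 2.5


def mean_sus(sessions):
    """Average SUS score across several participants in one round of testing."""
    return mean(sus_score(responses) for responses in sessions)


# Made-up answers for illustration only (not real eQ research data).
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example))  # 75.0
```

Comparing `mean_sus` between rounds of lab sessions is one simple way to spot the kind of decrease described above.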

As a result we focused our research attention on understanding what our Authors call things by running a series of open and closed card sorts. This method helped us not only understand the language that Authors use when creating or editing a questionnaire, but also (and perhaps more importantly) see from our Authors’ point of view which elements of a questionnaire they think of together and separately. The biggest lesson I took from conducting the card sorting was that we should have run it with the Authors earlier. Learning how Authors structure questionnaires, and which elements they’re thinking of at what time, is going to direct how we design in the Beta.
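A common way to analyse card sort results is to count how often each pair of cards is placed in the same group across participants. The sketch below illustrates that idea under stated assumptions: the card names and the two participants' sorts are hypothetical, and this is not the analysis Optimal Workshop or the eQ team actually ran.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants put each pair of cards in the same group.

    `sorts` is a list with one entry per participant; each entry is a list
    of groups, and each group is a list of card names.
    """
    counts = Counter()
    for groups in sorts:
        for group in groups:
            # Sort the names so each pair is always stored in the same order.
            for pair in combinations(sorted(set(group)), 2):
                counts[pair] += 1
    return counts


def similarity(sorts):
    """Share of participants (0.0 to 1.0) who grouped each pair together."""
    participants = len(sorts)
    return {pair: n / participants for pair, n in co_occurrence(sorts).items()}


# Hypothetical sorts from two participants, for illustration only.
sorts = [
    [["question", "guidance"], ["error message"]],
    [["question", "guidance", "error message"]],
]

for pair, share in sorted(similarity(sorts).items()):
    print(pair, share)
```

Pairs with a high share are elements that users tend to think of together, which can inform how they are grouped and labelled in a design.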

In the Alpha we started to experiment with different online usability testing software. Optimal Workshop was used for card sorting and click testing. Survey Monkey was used to get high numbers of responses to questions we had for our users, and also to run a “What’s this called?” test, in which we showed Authors screenshots of elements within questionnaires and asked them to provide a name. Finally, we played with Usability Hub but did not have a use for it. Optimal Workshop is well documented as a useful tool for anyone wanting to conduct research on designs and Information Architecture; I share this view and have many plans for future use. Survey Monkey is very well known, but as we are creating our own questionnaire tool we hope that soon we will not have to use it. Usability Hub looked good, but Optimal Workshop was easier to set up and I question the usefulness of some of the tasks that Usability Hub offers, such as the preference test.

Beta

What then for the Beta? The most important thing to state is how we are changing our workflow. As I’ve already mentioned, the benefits we were taking from the lab diminished because functionality was preceding design, partly due to the makeup of the skills in the team; this has been addressed. There is a good deal of chat on the internet about how design and research fit into an agile development cycle. Paul Boag talks about how UX has a problem with Agile because the whole user experience is not realised when delivering functions in sprints, meaning, as we found in our Alpha, that the initial design is no longer suitable as more functionality is added. Jim Bowes develops the issue of including UX in development sprints further and proposes some fixes. We have taken the decision as a team to incorporate, with some slight adaptations, some of the fixes proposed (although I must confess I only came across the article after we had set out a vision for our new workflow). The changes we are making include having product planning, research, design and design testing work a few sprints ahead of the development sprints. In the UX period, however, we will not be working to sprints but to a continuous flow (Kanban), in recognition that stories in the UX period do not follow a linear flow but are cyclic, so a design that is tested can flow back into design in response to the findings. We’ll update you on how this works out for us, as we will be continuously looking for ways to improve our workflow.

We are changing our focus at the beginning of Beta away from Authors to Respondents. Alpha for us was about learning about the most challenging user needs; we achieved that and now know our Authors much better. The focus for Beta is to get a questionnaire online and collecting real data, so we will be focusing on Respondents. In addition, focusing on Respondent needs will better help determine what is designed for Authors, as they too will need to meet the needs of Respondents. Our aim for Respondents is to create the design patterns for how questionnaires will look and how the elements within a questionnaire will interact with one another. It will be up to our Authors to determine the content of the questions, guidance and error messages.

Now our Respondents could literally be anyone, and I know this sounds like a get-out-of-jail-free card for not understanding your users properly, but bear with me a minute. ONS (http://www.ons.gov.uk/ons/about-ons/who-ons-are/index.html) send thousands of surveys every year to businesses and members of the public, and anyone could be asked to complete one of these surveys. In addition, ONS are responsible for the Census, the definition of which is to gather information about an entire population. It is the responsibility of our Authors to design questionnaire services that meet the needs of the Respondents of their specific survey; it is our responsibility to ensure that the elements within those questionnaires can be understood and used by all those Respondents. To do this we will be running usability research on our designs and developed product with members of the public sampled by device usage (smartphone, tablet and desktop), digital literacy (from low and unconfident to high and very confident) and digital accessibility. This will enable us to be certain that the elements of the eQ will be suitable for any ONS Respondent.

To support this research we will be growing a mobile usability lab that is equipped with mobile phones, tablets and laptops. Having a mobile usability lab will mean that we can go to where our users are and recruit those users who may be put off by the idea of coming into a Government building, which comes with security checks and lengthy procedures, or into city centre digital usability labs. The hope is that a mobile lab will give us richer and more true-to-life findings.

And that’s all for part 2…
